How to connect to Google DialogFlow in Dialogue Studio

In this scenario you will integrate Google DialogFlow Natural Language Understanding (NLU) with AnywhereNow Dialogue Studio. This means you can add a smart assistant to your IVR that can handle questions, such as case information, and perform intelligent routing based on the user's speech input. Interactive Voice Response, or IVR, is a telephone application that takes orders via the telephone keypad or voice through a computer: by choosing menu options the caller receives information without the intervention of a human operator, or is forwarded to the appropriate Agent. AnywhereNow Dialogue Studio contains specific nodes that can connect with DialogFlow (see the node descriptions under Components for more details).

Using a smart assistant in your IVR adds an extra layer that can gather information. The assistant can route your users to specific skills based on their voice input or provide a self-service experience.

Preparation

This scenario will make use of Google Dialogflow.

DialogFlow can be configured at https://dialogflow.cloud.google.com/.

Intents represent the goal of the user's input: what the user wants to achieve, such as ‘I need help with insuring my new car’ or ‘I have a question about my current ticket’. Create a DialogFlow agent with at least two or three specific intents; start off with 3 intents that are not too similar. Each intent needs around 15-20 training phrases (more is better, but too many can make your assistant too specialized in one intent). Each intent should have roughly the same number of training phrases.

Entities are specific topics, such as the type of insurance. You can define synonyms that the assistant can recognize (for example, instead of ‘car’ you could add car brands). The content of an entity does not determine the intent, but the presence of an entity does. Don’t forget to highlight the entities in the training phrases if DialogFlow doesn’t do so automatically.

Prerequisites

For this scenario the following prerequisites must be in place:

- A UCC configured with Dialogue Studio. A Unified Contact Center, or UCC, is a queue of interactions (voice, email, IM, etc.) that are handled by Agents. Each UCC has its own settings, IVR menus and Agents. Agents can belong to one or several UCCs and can have multiple skills (competencies). A UCC can be visualized as a contact center “micro service”. Customers can utilize one UCC (e.g. a global helpdesk), a few UCCs (e.g. for each department or regional office) or hundreds of UCCs (e.g. for each bed at a hospital). They are interconnected and can all be managed from one central location.
- A DialogFlow Agent configured. See: Quickstart: Create, train, and publish your DialogFlow
- Transcription configured. For more information, see: How to configure UCC speech to text transcription

Configuration

Bot greeting

Our first step is to initiate the bot, greet the customer, and wait for their response.

We are going to start with an Incoming Call node. This node only listens to both audio sessions. It is connected to our server using SignalR.

Steps:

- Drag and drop an Incoming Call node
- Open the node
- Select / configure the server
- Filter on: Audio/Video

Next, greet the customer and ask what their question is. This can be done with a Say node. In this node you type the text, which is converted to speech using a speech engine (see: Speech Engines for AnywhereNow).

Steps:

- Drag and drop a Say node
- Open the node
- Enter the text you want to play
- Connect end of the Incoming Call node with begin of the Say node

Start Transcriptor

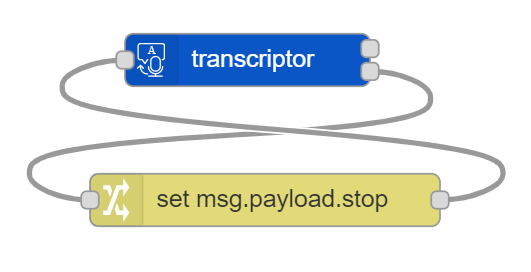

For the Voice-bot we want to listen to the customer. This can be done with the Transcriptor node.

Steps:

- Drag and drop a Transcriptor node
- Open the node
- Optionally, change the language of the node
- Connect the end of the Say node (or the otherwise option of the Switch node) with begin of the Transcriptor node.

By default the Transcriptor node keeps listening to the customer and restarting the flow. This is useful when integrating with a natural language understanding API that can hold a conversation (for example DialogFlow). In this case we need to stop after the first output. This can be done by stopping the Transcriptor with a stop payload.

Steps:

- Drag and drop a Change node
- Open the node
- Create a rule
- Set msg.payload.stop to: true (Boolean)
- Connect the "events with transcriptor" end with begin of the Change node
- Connect end of the Change node with begin of the Transcriptor node

Finally we need to store the output in an object we can use later. This can be done with a Change node.

Steps:

- Drag and drop a Change node
- Open the node
- Create a rule
- Set msg.payload to: msg.payload.transcriptor.transcript
- Connect the "events with transcriptor" end with begin of the Change node
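The two Change nodes above can be read as simple edits on the flow's message object. The following is only an illustrative sketch in Python, not how Dialogue Studio is configured (there these are Change-node rules); the function names and the sample transcript are assumptions made for the example.

```python
import copy

def set_stop_flag(msg: dict) -> dict:
    """Models the first Change node: set msg.payload.stop to true."""
    out = copy.deepcopy(msg)              # leave the incoming event untouched
    out.setdefault("payload", {})["stop"] = True
    return out

def keep_transcript_only(msg: dict) -> dict:
    """Models the second Change node: replace msg.payload with the transcript text."""
    out = copy.deepcopy(msg)
    out["payload"] = msg["payload"]["transcriptor"]["transcript"]
    return out

# Hypothetical event from the "events with transcriptor" output.
event = {"payload": {"transcriptor": {"transcript": "I have a question about my ticket"}}}

stop_msg = set_stop_flag(event)           # wired back into the Transcriptor node
text_msg = keep_transcript_only(event)    # wired onward to the rest of the flow

print(stop_msg["payload"]["stop"])
print(text_msg["payload"])
```

Both functions start from the same event because both Change nodes are connected to the same output.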

Configure DialogFlow

Next is the DialogFlow node, the node that connects your IVR to the smart assistant. This node needs to be configured, but AnywhereNow Dialogue Studio presents the information to the creator. To configure this node correctly you need a working DialogFlow assistant and access to the Google Cloud console. Also set the identifier to msg.session.id; this makes sure the user stays in the same session and does not start over after one run through DialogFlow.

Steps:

- Drag and drop a DialogFlow node
- Open the node and set:
  - Agent = Select your DialogFlow configuration. If you haven't got a configuration yet, you can add a new one:
    - Log in to Service accounts – IAM & Admin – <your project name> – Google Cloud console
    - In the Project dropdown, select the project of the DialogFlow Agent.
    - If you do not have a Service account yet, click Create Service Account. Otherwise, click a Service account in the list.
    - Switch to the Keys tab.
    - Click Add Key, select JSON, and click Create.
    - The JSON file is now downloaded. Copy and paste its content into the JSON field of the DialogFlow config node.
  - Session = msg.session.id
  - Language = Select the language of the bot.
- Connect the output of the previous node (the Change node) to the begin of the DialogFlow node.
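Before pasting the downloaded key into the config node, it can help to confirm that the file is a complete service account key. The sketch below is an optional, standalone check and not part of Dialogue Studio; the function name is an assumption, and the sample values are placeholders rather than real credentials. The field names checked are those found in a Google service account JSON key.

```python
import json
from typing import List

REQUIRED_FIELDS = ("type", "project_id", "private_key", "client_email")

def check_service_account_key(raw: str) -> List[str]:
    """Return a list of problems found in the pasted key; an empty list means it looks plausible."""
    try:
        key = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(key, dict):
        return ["top-level JSON value must be an object"]
    # Report every required field that is absent or empty.
    problems = [f"missing field: {name}" for name in REQUIRED_FIELDS if not key.get(name)]
    # A present but different type means this is another kind of credential file.
    if key.get("type") not in (None, "service_account"):
        problems.append("type is not 'service_account'")
    return problems

# Placeholder key for illustration only.
sample = json.dumps({
    "type": "service_account",
    "project_id": "example-project",
    "private_key": "<redacted>",
    "client_email": "bot@example-project.iam.gserviceaccount.com",
})
print(check_service_account_key(sample))
```

The check only inspects the structure of the file; it does not contact Google or verify that the key is still active.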

Now whenever a user says something in the IVR, the message is sent to DialogFlow, which detects the intent and entities and, if needed, continues the dialogue to obtain the information required to route the user to the correct Skill or handle their question.

Handle response

Now that we have our response, we need to handle it appropriately. First we need to store the output in an object we can use later. This can be done with a Change node.

Steps:

- Drag and drop a Change node
- Open the node
- Create 2 rules:
  - Set msg.complete to: msg.payload.diagnosticInfo.fields.end_conversation.boolValue
  - Set msg.intent to: msg.payload.intent.displayName
- Connect end of the DialogFlow node with begin of the Change node

Note: Additionally, if you have parameters, you can read them from the same payload; see (Optional) Handle missing parameter for the property path.
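To make the two rules concrete, the sketch below models them in Python on a sample message. It is an illustration only: the helper names and the sample payload are assumptions, and the result is stored under complete because that is the property name the later Switch node reads.

```python
def get_path(obj, path, default=None):
    """Follow a dotted path through nested dicts; return default if any step is missing."""
    current = obj
    for key in path.split("."):
        if not isinstance(current, dict) or key not in current:
            return default
        current = current[key]
    return current

def apply_rules(msg: dict) -> dict:
    """Models the Change node: copy two values out of the DialogFlow payload."""
    out = dict(msg)
    out["complete"] = get_path(
        msg, "payload.diagnosticInfo.fields.end_conversation.boolValue"
    )
    out["intent"] = get_path(msg, "payload.intent.displayName")
    return out

# Hypothetical DialogFlow node output, reduced to the fields used above.
response = {
    "payload": {
        "intent": {"displayName": "ticket_status"},
        "diagnosticInfo": {"fields": {"end_conversation": {"boolValue": True}}},
    }
}

handled = apply_rules(response)
print(handled["intent"], handled["complete"])
```

In this sketch a missing path yields None rather than an error, so a response without diagnosticInfo is simply treated as not complete further down the flow.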

With this information you can introduce a Switch node, which lets you route the flow based on e.g. intents, parameters or entities. In this example we will switch on intent.

Steps:

- Drag and drop a Switch node
- Open the node
- Change Property to msg.intent
- Add an option per intent, for example: == ticket_status
- Add the option otherwise, for the case where DialogFlow didn't detect the intent.
- Connect end of the Change node to begin of the Switch node
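The routing performed by this Switch node can be sketched as follows. This is an illustration in Python rather than Dialogue Studio configuration; ticket_status comes from the example above, while insurance_question and the function name are hypothetical.

```python
def route_on_intent(msg: dict, known_intents: list) -> int:
    """Return the index of the output that would fire: one per intent, the last one is 'otherwise'."""
    intent = msg.get("intent")
    for index, name in enumerate(known_intents):
        if intent == name:          # the '==' rule for this intent
            return index
    return len(known_intents)       # the 'otherwise' output

intents = ["ticket_status", "insurance_question"]

first = route_on_intent({"intent": "ticket_status"}, intents)
fallback = route_on_intent({"intent": "Default Fallback Intent"}, intents)
print(first, fallback)
```

Keeping the otherwise output last means any intent without its own rule still leaves the node through a known path.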

You can now continue with your flow, based on the intent of the customer. Below are some scenarios you can encounter when using DialogFlow.

(Optional) Play DialogFlow Response

In DialogFlow you can also write responses for when an Intent has been detected, or a prompt for when a parameter is missing. These responses can be used by Dialogue Studio with a Say node.

Steps:

- Drag and drop a Say node
- Open the node
- Change to expression
- Use the following expression:
- Connect end of previous node to begin of the Say node

(Optional) Handle end of DialogFlow conversation

In DialogFlow you can set whether a conversation is completed. This tells you if you need to send another message to DialogFlow or if you can continue with your own flow. When handling the response we stored the complete state in msg.complete. Now we need to check the value; this can be done with a Switch node.

Steps:

- Drag and drop a Switch node
- Open the node
- Change Property to msg.complete
- Add an option to check if completed: is true
- Add the option otherwise, for the case where DialogFlow isn't finished yet.
- Connect end of the previous node to begin of the Switch node

If not completed, you can continue with (Optional) Send another response.
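As a rough illustration of this check, the sketch below models the two outputs in Python. The function name and the returned labels are assumptions for the example; the sketch treats only a boolean true as completed, so a missing value falls through to otherwise.

```python
def next_step(msg: dict) -> str:
    """Models the Switch node: first output when msg.complete is true, otherwise the second."""
    if msg.get("complete") is True:
        return "continue with own flow"
    return "send another response"

print(next_step({"complete": True}))
print(next_step({"complete": False}))
```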

(Optional) Handle missing parameter

In DialogFlow you can configure some parameters as required. For example, when you want to schedule a meeting, you need to know the date and time.

An intent with missing parameters is marked as incomplete (msg.complete is false). To verify our parameter we need to check its value; this can be done with a Switch node.

Steps:

- Drag and drop a Switch node
- Open the node
- Change Property to msg.payload.parameters.fields.[parametername].stringValue
- Add two options to check if the parameter is filled:
  - is empty
  - is not empty
- Connect end of the previous node to begin of the Switch node

If missing, you can continue with (Optional) Send another response.
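The parameter check can be sketched in Python as shown below. This is an illustration only: the parameter name date and the function names are hypothetical, and the sketch assumes that an unfilled parameter arrives either as an empty string or not at all, treating both as missing.

```python
def parameter_value(msg: dict, name: str) -> str:
    """Read msg.payload.parameters.fields.[name].stringValue, or '' when it is absent."""
    fields = msg.get("payload", {}).get("parameters", {}).get("fields", {})
    return fields.get(name, {}).get("stringValue", "")

def is_missing(msg: dict, name: str) -> bool:
    """Models the 'is empty' option of the Switch node for one parameter."""
    return parameter_value(msg, name) == ""

# Hypothetical payloads for a required 'date' parameter.
filled = {"payload": {"parameters": {"fields": {"date": {"stringValue": "2024-05-01"}}}}}
unfilled = {"payload": {"parameters": {"fields": {"date": {"stringValue": ""}}}}}

print(is_missing(filled, "date"), is_missing(unfilled, "date"))
```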

(Optional) Handle unknown intent

When DialogFlow cannot detect the intent, it selects the "Default Fallback Intent". This intent also contains a response, which can be sent to the customer to prompt them to respond again. By default this is marked as an incomplete conversation (msg.complete is false).

During fallback, you can continue with (Optional) Send another response.

(Optional) Send another response

When making a Voice-bot, you don't need to do anything. The Transcriptor node (added in Start Transcriptor) keeps listening and sends each new output to DialogFlow, unless you have stopped your transcriptor; in that case you need to start it again.