How to configure a simple Voice Bot (with Azure OpenAI) in Dialogue Studio

This article guides you through creating a Voice Bot with Dialogue Studio. We walk through the steps to set up a simple voicebot that uses the Azure OpenAI API to process the customer's question and provide an appropriate response. We also explain how each part of the dialogue flow works, so you can adapt the voicebot to your customers' needs.


Prerequisites

-

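Completed the steps in How to connect to Azure OpenAI in Dialogue Studio, so that the Azure OpenAI configuration (the endpoint URL and API key, which the flow reads from the global context as AzureOpenAI-Config.url and AzureOpenAI-Config.apiKey) is stored in Dialogue Studio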

-

Transcription configured
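The Function node in the imported flow reads two values from the global context: AzureOpenAI-Config.url and AzureOpenAI-Config.apiKey. The sketch below shows one way that object could look and how to sanity-check it. It assumes the stored url is the full chat completions endpoint of an Azure OpenAI deployment (the flow posts to it directly). The resource name, deployment name, and api-version value are hypothetical placeholders; use the values from your own Azure OpenAI resource, and treat the prerequisite article as the reference for how the configuration is actually stored.

```javascript
// Sketch of the object the flow reads with global.get("AzureOpenAI-Config.url")
// and global.get("AzureOpenAI-Config.apiKey"). Resource, deployment, and
// api-version values used below are hypothetical placeholders.

// Builds an Azure OpenAI chat completions endpoint URL.
function buildChatCompletionsUrl(resource, deployment, apiVersion) {
  const base = `https://${resource}.openai.azure.com`;
  const path = `/openai/deployments/${encodeURIComponent(deployment)}/chat/completions`;
  return `${base}${path}?api-version=${encodeURIComponent(apiVersion)}`;
}

// Returns a list of problems; an empty list means the config looks usable.
function checkAzureOpenAIConfig(config) {
  if (!config || typeof config !== "object") {
    return ["config is missing"];
  }
  const problems = [];
  if (typeof config.url !== "string" || !config.url.startsWith("https://")) {
    problems.push("url must be an https URL");
  } else if (!config.url.includes("/chat/completions")) {
    problems.push("url should point at a chat completions endpoint");
  }
  if (typeof config.apiKey !== "string" || config.apiKey === "") {
    problems.push("apiKey is missing or empty");
  }
  return problems;
}

// Example of the expected shape (placeholder values, not real credentials).
const exampleConfig = {
  url: buildChatCompletionsUrl("my-resource", "my-gpt-deployment", "2024-02-01"),
  apiKey: "<your-api-key>",
};
```

Assuming Dialogue Studio's Function node exposes the standard Node-RED context API (which the flow's own global.get calls suggest), an object of this shape could be stored with global.set("AzureOpenAI-Config", exampleConfig).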

Configure

Follow the steps below to configure a simple Voice Bot in Dialogue Studio:

-

Log in to your Dialogue Studio environment.

-

Open or create the tab where you want to add the simple Voice Bot.

-

From the menu in the top right, select “Import” and paste the following JSON:

[{"id":"34f63c505a6d5f3b","type":"tab","label":"Azure OpenAI Voicebot","disabled":false,"info":"","env":[]},{"id":"baf409270cd6f17e","type":"group","z":"34f63c505a6d5f3b","name":"Simple Chat from Azure Open AI","style":{"label":true},"nodes":["d01ee3d818befcfd","a946bf11f405fd58","e6279e32e72e89b3"],"x":1154,"y":399,"w":632,"h":82},{"id":"96b8970d.bdafb8","type":"any-red-say","z":"34f63c505a6d5f3b","name":"","text":"\"Hey there, I am the Anywhere 3 6 5 voice bot,\" &\t\"You are now talking to Azure Open A I,\" &\t\"What do you want to ask?\"","dataType":"jsonata","saymethod":"Default","voice":"","x":760,"y":320,"wires":[[]]},{"id":"b1c459ca.01f638","type":"any-red-transcriptor","z":"34f63c505a6d5f3b","name":"","enable":true,"culture":"en-US","final":true,"x":710,"y":440,"wires":[[],["a35e189a.8d03a8","b95f28a1.0cb078"]]},{"id":"b95f28a1.0cb078","type":"any-red-say","z":"34f63c505a6d5f3b","name":"","text":"\"Ok, asking Azure Open A I: \" & payload.transcriptor.transcript","dataType":"jsonata","saymethod":"Default","voice":"","x":990,"y":440,"wires":[["a946bf11f405fd58"]]},{"id":"a35e189a.8d03a8","type":"change","z":"34f63c505a6d5f3b","name":"","rules":[{"t":"set","p":"payload.stop","pt":"msg","to":"true","tot":"bool"}],"action":"","property":"","from":"","to":"","reg":false,"x":700,"y":520,"wires":[["b1c459ca.01f638"]]},{"id":"5711dde4.ce89e4","type":"any-red-say","z":"34f63c505a6d5f3b","name":"","text":"\"Here is what Azure Open A I responded: \" & payload","dataType":"jsonata","saymethod":"Default","voice":"","x":1930,"y":440,"wires":[["bcc1ca14.e7fe08"]]},{"id":"21a6ba68.62df26","type":"any-red-incoming-call","z":"34f63c505a6d5f3b","name":"","config":"b3706e2bf304a970","filtertype":"audiovideo","x":420,"y":440,"wires":[["96b8970d.bdafb8","b1c459ca.01f638"]]},{"id":"bcc1ca14.e7fe08","type":"any-red-say","z":"34f63c505a6d5f3b","name":"","text":"You will now be disconnected","dataType":"str","saymethod":"Default","voice":"","x":2190,"y":440,"wires":[["ef9fb053.12346"]]},{"id":"ef9fb053.12346","type":"any-red-action","z":"34f63c505a6d5f3b","name":"","sweetName":"Empty action","actionType":"disconnect","fromSessionId":"session.id","fromDataType":"msg","toSessionId":"fromSessionId","toDataType":"msg","skill":"","skillDataType":"str","sip":"","sipDataType":"str","agentSips":"","agentSipsDataType":"str","config":"b3706e2bf304a970","questionsfilter":"","questionid":"","x":2390,"y":440,"wires":[]},{"id":"7132f188.c4c3","type":"comment","z":"34f63c505a6d5f3b","name":"Todo: Configure UCC","info":"Select a UCC in the Incoming call node or create a configuration for your UCC.","x":380,"y":400,"wires":[]},{"id":"bf9ab164.50b33","type":"comment","z":"34f63c505a6d5f3b","name":"The transcriber needs to be stopped, else it will continue transcribing.","info":"","x":880,"y":400,"wires":[]},{"id":"278fc976.6b7e56","type":"comment","z":"34f63c505a6d5f3b","name":"This Say will add the output from Open AI in a sentence","info":"","x":2000,"y":380,"wires":[]},{"id":"d01ee3d818befcfd","type":"http request","z":"34f63c505a6d5f3b","g":"baf409270cd6f17e","name":"","method":"POST","ret":"obj","paytoqs":"ignore","url":"","tls":"","persist":false,"proxy":"","insecureHTTPParser":false,"authType":"","senderr":false,"headers":[],"x":1510,"y":440,"wires":[["e6279e32e72e89b3"]]},{"id":"a946bf11f405fd58","type":"function","z":"34f63c505a6d5f3b","g":"baf409270cd6f17e","name":"Prepare request for OpenAI","func":"const url = global.get(\"AzureOpenAI-Config.url\");\nconst key = global.get(\"AzureOpenAI-Config.apiKey\");\nconst message = msg.payload.transcriptor.transcript;\n\nmsg.headers = {\n \"Content-Type\": \"application/json\",\n \"api-key\": key\n};\n\nmsg.url = url;\n\n// Main JSON structure, referencing the variables\nmsg.payload = {\n \"messages\": [\n {\n \"role\": \"system\",\n \"content\": \"You are an AI assistant that helps people find information.\"\n },\n {\n \"role\": \"user\",\n \"content\": message\n } \n ],\n \"temperature\": 0.7,\n \"top_p\": 0.95,\n \"n\": 2,\n \"stream\": false,\n \"max_tokens\": 4096,\n \"presence_penalty\": 0,\n \"frequency_penalty\": 0\n};\n\nreturn msg;","outputs":1,"timeout":"","noerr":0,"initialize":"","finalize":"","libs":[],"x":1300,"y":440,"wires":[["d01ee3d818befcfd"]]},{"id":"e6279e32e72e89b3","type":"change","z":"34f63c505a6d5f3b","g":"baf409270cd6f17e","name":"","rules":[{"t":"set","p":"payload","pt":"msg","to":"payload.choices[0].message.content","tot":"msg"}],"action":"","property":"","from":"","to":"","reg":false,"x":1680,"y":440,"wires":[["5711dde4.ce89e4"]]},{"id":"b3706e2bf304a970","type":"any-red-config","active":true,"ucc":"ucc_michael","priority":"0"}]

-

Open the Incoming Call node and select or configure a server (the UCC configuration the flow listens on). For more information, see Create your first flow.

-

To test your voicebot, call the UCC (Unified Contact Center, the queue of interactions such as voice calls, email, and IM that is handled by agents) and say the question you want to ask Azure OpenAI. The voicebot repeats your question, reads out the response from Azure OpenAI, and then disconnects the call.

-

If you need to customize or extend the voicebot, open the tab, modify the nodes and responses as needed, and save your changes.

-

Test the voicebot again to make sure it works.

-

Congratulations, you have now successfully configured a simple Voice Bot in Dialogue Studio!
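Because the flow is imported as raw JSON, a damaged copy (for example, a string literal broken across two lines) or a wire that points at a missing node is easy to miss. The following Node.js sketch parses a Node-RED flow export, reports wires whose target id does not exist, and lists the UCC names in any-red-config nodes; the sample flow ships with "ucc_michael", which you will most likely need to change to your own UCC. checkFlow is a hypothetical helper, not a Dialogue Studio API; it relies only on the standard Node-RED export format, where each node's wires property holds one array of target ids per output.

```javascript
// Sketch: sanity-check a Node-RED flow export before importing it.
// checkFlow is a hypothetical helper, not a Dialogue Studio API.
function checkFlow(flowJson) {
  // JSON.parse throws a SyntaxError if the text is not valid JSON,
  // for example when a string literal was broken across two lines.
  const nodes = JSON.parse(flowJson);
  const ids = new Set(nodes.map((node) => node.id));
  const missingTargets = [];

  for (const node of nodes) {
    // "wires" holds one array of target node ids per output port.
    for (const port of node.wires || []) {
      for (const target of port) {
        if (!ids.has(target)) {
          missingTargets.push(`${node.id} -> ${target}`);
        }
      }
    }
  }

  // UCC names configured in any-red-config nodes (the sample uses "ucc_michael").
  const uccs = nodes
    .filter((node) => node.type === "any-red-config")
    .map((node) => node.ucc);

  return { nodeCount: nodes.length, missingTargets, uccs };
}
```

For example, after saving the JSON to a file (the name voicebot-flow.json is only an example), run checkFlow(require("fs").readFileSync("voicebot-flow.json", "utf8")) and confirm that missingTargets is empty and uccs shows the UCC you intend to use.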

Explanation

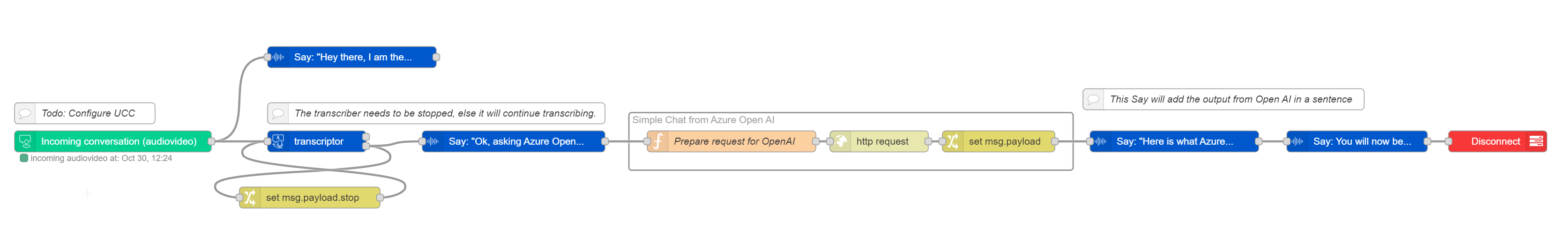

The sections below explain the main parts of the flow.

Configure the incoming call

The first node in the flow is an Incoming Call node. It listens for calls on the UCC selected in its configuration and starts the flow when a call arrives.

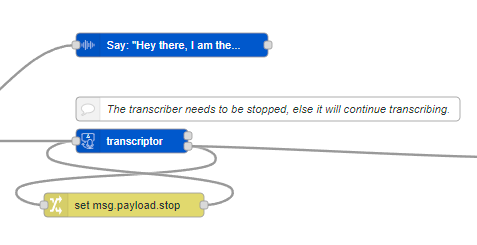

Greet the customer and start the transcriptor

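The Incoming Call node passes each new call to two nodes in parallel: a Say node that greets the customer, and a Transcriptor node (culture en-US) that transcribes what the customer says. When the Transcriptor produces a final transcript, it sends it from its second output to two places: a Say node that repeats the question back to the customer, and a Change node that sets msg.payload.stop to true and wires the message back into the Transcriptor. This stop message ends the transcription, so the Transcriptor does not keep transcribing indefinitely.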

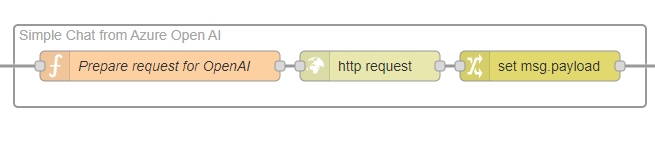
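The Change node that stops the Transcriptor has a single rule: set msg.payload.stop to the boolean true, then send the message back into the Transcriptor. If you prefer a Function node, the same behavior can be sketched as below. This is an illustrative equivalent rather than part of the imported flow, and stopTranscriptor is a hypothetical name used so the logic can be tested outside Dialogue Studio.

```javascript
// Sketch: a Function-node equivalent of the Change node that stops the
// Transcriptor. stopTranscriptor is a hypothetical name used for testing;
// inside a Function node you would keep only the body.
function stopTranscriptor(msg) {
  // Defensive default; in the flow, msg.payload already holds the transcript object.
  msg.payload = msg.payload || {};
  // Same rule as the Change node: set msg.payload.stop to the boolean true.
  msg.payload.stop = true;
  // The output is wired back into the Transcriptor node's input.
  return msg;
}
```

The Change node remains the simpler choice for a single property assignment; a Function node only pays off if you later add logic, such as ignoring empty transcripts.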

Send the transcription to be analyzed

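A Function node named Prepare request for OpenAI builds the request. It places the customer's transcript (msg.payload.transcriptor.transcript) in the request body as the user message, after a system message that tells the model how to behave. It also fills in the headers, including the api-key header, and the URL, both taken from the Azure OpenAI configuration stored in the global context. An HTTP Request node then sends the request as a POST. Because the URL field of the HTTP Request node is empty, it uses msg.url, and it returns the parsed JSON response as an object.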
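To check your endpoint and key outside Dialogue Studio before placing a test call, you can reproduce the same request in plain Node.js. The sketch below assumes Node.js 18 or later (for the built-in fetch) and reads the URL and key from AZURE_OPENAI_URL and AZURE_OPENAI_KEY, which are hypothetical environment variable names; the request body copies the values used by the flow's Function node.

```javascript
// Sketch: reproduce the "Prepare request for OpenAI" Function node and the
// HTTP Request node in Node.js 18+ (global fetch). AZURE_OPENAI_URL and
// AZURE_OPENAI_KEY are hypothetical environment variable names.
function buildChatRequest(url, apiKey, transcript) {
  // Same body values as the Function node in the imported flow.
  const body = {
    messages: [
      { role: "system", content: "You are an AI assistant that helps people find information." },
      { role: "user", content: transcript },
    ],
    temperature: 0.7,
    top_p: 0.95,
    n: 2,
    stream: false,
    max_tokens: 4096,
    presence_penalty: 0,
    frequency_penalty: 0,
  };
  return {
    url,
    options: {
      method: "POST",
      headers: { "Content-Type": "application/json", "api-key": apiKey },
      body: JSON.stringify(body),
    },
  };
}

async function askAzureOpenAI(transcript) {
  const { url, options } = buildChatRequest(
    process.env.AZURE_OPENAI_URL,
    process.env.AZURE_OPENAI_KEY,
    transcript
  );
  const response = await fetch(url, options);
  if (!response.ok) {
    // Surface the status and error body instead of failing on a missing "choices" array.
    throw new Error(`Azure OpenAI returned HTTP ${response.status}: ${await response.text()}`);
  }
  const data = await response.json();
  // Same path the flow's Change node reads.
  return data.choices[0].message.content;
}
```

With the environment variables set, askAzureOpenAI("What are your opening hours?").then(console.log, console.error) prints either the answer or the HTTP error returned by Azure OpenAI.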

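In this example, the Function node sends the customer's transcript to Azure OpenAI with the following code. Note that "n": 2 asks Azure OpenAI for two alternative completions, while the rest of the flow only reads the first one (choices[0]); setting n to 1 avoids generating a response that is never used.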

const url = global.get("AzureOpenAI-Config.url");

const key = global.get("AzureOpenAI-Config.apiKey");

const message = msg.payload.transcriptor.transcript;

msg.headers = {

"Content-Type": "application/json",

"api-key": key

};

msg.url = url;

// Main JSON structure, referencing the variables

msg.payload = {

"messages": [

{

"role": "system",

"content": "You are an AI assistant that helps people find information."

},

{

"role": "user",

"content": message

}

],

"temperature": 0.7,

"top_p": 0.95,

"n": 2,

"stream": false,

"max_tokens": 4096,

"presence_penalty": 0,

"frequency_penalty": 0

};

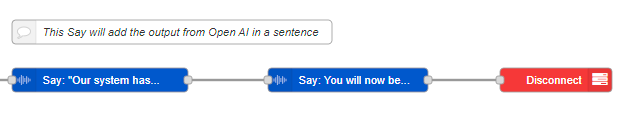

return msg;Inform the customer about the output

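The HTTP Request node returns the response from Azure OpenAI as an object. A Change node then replaces msg.payload with the generated answer, payload.choices[0].message.content, and passes it to a Say node, which reads the answer to the customer (“Here is what Azure Open A I responded: …”). Finally, a second Say node tells the customer that they will be disconnected, and an Action node disconnects the call.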
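The imported flow assumes every response contains choices[0].message.content. An error response from Azure OpenAI carries an error object instead of a choices array, so the Say node would have nothing meaningful to read out. The sketch below mirrors the Change node and the Say node as plain JavaScript and adds a fallback sentence; the function names and the fallback text are hypothetical additions, not part of the imported flow.

```javascript
// Sketch of the response handling at the end of the flow. extractReply mirrors
// the Change node (payload.choices[0].message.content); buildSpokenAnswer
// mirrors the Say node's JSONata. The fallback sentence is hypothetical.
function extractReply(responseBody) {
  const choices = responseBody && Array.isArray(responseBody.choices) ? responseBody.choices : [];
  const first = choices[0];
  const content = first && first.message ? first.message.content : undefined;
  // Treat missing or blank content as "no answer".
  return typeof content === "string" && content.trim() !== "" ? content : null;
}

function buildSpokenAnswer(responseBody) {
  const reply = extractReply(responseBody);
  if (reply === null) {
    return "Sorry, Azure Open A I did not return an answer.";
  }
  // Same sentence as: "Here is what Azure Open A I responded: " & payload
  return "Here is what Azure Open A I responded: " + reply;
}
```

In Dialogue Studio, comparable logic could live in a Function node placed between the HTTP Request node and the Say node, with the Say node's text reduced to payload.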